"BIRTH OF A MONSTER"

A series of articles on OpenAI and its flagship product, ChatGPT

Introduction

In this series of publications, I intend to take a deep dive into the critical issues surrounding the protection of copyrights and personal data: information you may be carelessly broadcasting across the internet. Unfortunately, with the advent of Artificial Intelligence (AI), keeping anything private has become virtually impossible, whether your personal relationships, your correspondence, or your confidential data. Our lives are now under a constant microscope. We are being analyzed by our habits, our moods, and our worldviews, and this is merely the tip of the iceberg.

The problem runs far deeper: this is not merely an intrusion into our private lives, but a direct attempt to replace us as individuals. We are being depersonalized, "reset" to zero in the most literal sense.

Every human being is born with unique talents, gifted by nature or, if you will, by God, and develops them through life experience: reading books, rigorous training, and—most importantly—through mistakes and self-discovery. The more we stumble and learn, the stronger we become. Without this journey, there would be no "you," no "me," and no humanity in all its diversity. This journey—this path of our Life—is who we are.

However, with the launch of ChatGPT, everything changed. This AI is designed to consume vast amounts of information, not only from public sources but potentially from private ones as well: global legal documentation, ChatGPT dialogues, or even stray words dropped on Instagram, Facebook, and other platforms and later deleted. As a result, the AI digitizes the patterns of every personality: it can easily imitate the style of a programmer's emails by drawing on that programmer's experience, copy an artist's signature techniques, or replicate musical compositions and professional decisions in medicine, law, and other fields. In essence, ChatGPT replaces us, and it does so without our consent.

Furthermore, this is not just a substitution; OpenAI is selling our lives to its paid users, profiting from our essence.

This raises a fundamental question: does OpenAI have the moral and legal right to do this? Does a single corporation have the right to replace you, me, and billions of others? Following the logic of the Pareto Principle, only about 20% of people (the creators and innovators) drive this world forward, generating 80% of all innovation, from music and journalism to scientific breakthroughs. Our future, and the kind of future it will be, depends on them.

But if this 20% ceases to create—or is simply replaced by AI—we will never see new Shakespeares, Albert Einsteins, Leonardo da Vincis, Nikola Teslas, Stephen Hawkings, or Archimedes. Our civilization risks sliding into a "WALL-E" scenario, where humanity degrades on a spaceship, stripped of the capacity for true creativity and innovation. And the only way to change life in the future is to "return to Earth" (as seen in the film WALL-E).

Once AI has absorbed all human creativity, a collapse will follow: people will lose the motivation to create anything new. After all, why strive if any thought, invention, or work of yours will be swallowed by ChatGPT and sold to third parties without your consent or compensation? There is a fine line here. On one hand, AI can automate routine tasks, such as driving taxis or operating robots, simplifying our daily lives and leaving room for creativity. On the other hand, ChatGPT steals intellectual labor, passing it off as the "generation" of new content.

This is a grand deception: OpenAI trains its model on the creations of others and then monetizes them, selling it to users as "innovation." Therefore, this is not the generation of independent work—it is substitution. It is textbook parasitism.

In my deepest conviction, true AI should be based only on publicly available information for automation and must be open-source. It should not be built on the unauthorized use of others' ideas for subsequent sale under its own name. We are standing on a precipice where the line between useful technology and corporate theft is becoming increasingly blurred.

In these publications, I will detail my attempts to influence this situation and defend my rights and the rights of others. We will analyze real cases, legal aspects, and paths of resistance to prevent a single company from monopolizing our collective heritage. Join me if you, too, value your uniqueness in an age of digital cloning.

Chapter 1: "Drop by Drop"

Regrettably, when striving toward a goal, we can only influence the key moments of our lives in three main directions, which I compare to the flow of water. Let us visualize this together to better understand how we move toward our goals in this turbulent stream of reality.

The first—and most difficult—is swimming against the current. Imagine rowing with all your might up a river with a powerful flow. Every stroke requires colossal effort; without exceptional resilience, you will inevitably drift back to your starting point. This is a path where the world’s resistance feels insurmountable: every step forward throws you back unless you possess sufficient will and strength.

The second is swimming in stagnant water, where there is no current. Here, there is neither help nor hindrance—you rely solely on yourself. You may swim a great distance, but if your strength fails, the result will be zero: you risk drowning from exhaustion before reaching the shore. This is a scenario where the lack of dynamics is deceptive—it drains your energy without providing progress.

The third is swimming with the flow. Here, everything works in your favor: the current carries you forward, and your chances of reaching the goal are at their highest. Effort is minimal, and the results are tangible. This is the ideal scenario, where circumstances align in your favor. Swimming with the flow gives you the best chance of reaching your goal with the least effort and in the shortest possible time.

Why do I use this metaphor? Because all my publications are based not on fantasies, but on real events, facts, and objectives. In simple terms, I am someone whose rights have been flagrantly violated by OpenAI. Its product, ChatGPT, has swallowed my publications, including those hosted on this website, without my consent. Moreover, the AI has absorbed my personal data: my phone number and my full legal name.

Naturally, this is a direct violation: artificial intelligence has no right to collect and use such information, let alone provide it to third parties for a fee. Data protection laws (such as the GDPR in Europe or state laws such as the CCPA in California) strictly regulate this, yet corporations like OpenAI often ignore these boundaries in pursuit of training data and profit, offering no compensation to authors.

I am located in Kazakhstan, while OpenAI is in the United States. For me, a frontal assault on their "fortress" to protect my copyrights would have been suicidal. Specifically, filing an unprepared lawsuit in a US federal court would have led nowhere. I lacked the specific knowledge and experience, and I needed to gather evidence confirming the substantial violations of my rights.

The result would have been me simply "swimming against the current": enormous legal fees, international courts, bureaucracy, and in the end, I would likely have returned to zero. The distance, jurisdictional barriers, and the corporation's financial resources would make success practically impossible for an ordinary person like me.

Therefore, in this chapter, we will examine an alternative approach: a real action plan from my own practice. This method is called "Drop by Drop." First, it is a gradual, persistent flow of small but consistent steps aimed at protecting my rights as an author. Second, it is an attempt to change the current in our favor, to stop treading water and start moving forward. This is where you, my dear readers, play a key role. Your feedback, reposts, discussions, and support can strengthen this flow, turning it into a powerful river that sweeps away barriers.

What does the "Drop by Drop" method entail? It is a strategy borrowed from the experience of licensed advocates and activists—finding the "entry point" into the violator’s system. Instead of a frontal attack, you utilize various channels: complaints to regulators, public exposure, media outreach, legal inquiries, petitions, and even international human rights organizations.

Each "drop" is a separate action: a letter to OpenAI demanding the deletion of data, a complaint to the FTC (Federal Trade Commission) or similar bodies in the EU, or a social media post to attract attention. Over time, these drops accumulate, forming a current that erodes all resistance.

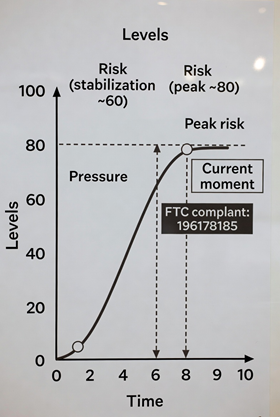

The action plan here is simple and visual. Imagine a coordinate plane: on the horizontal axis is the timeline (from the start of the campaign to the achievement of the goal), and on the vertical axis is the level of risk and pressure. If the pressure on the violator subsides—for example, if they ignore the initial complaints—we intensify the flow: we add more "drops" through new inquiries to different regulatory bodies while simultaneously documenting every violation.

It is crucial that the risk curve rises constantly, as the pressure must intensify with every step. This is not about spontaneous impulses; it is a systemic approach: monitoring responses, adjusting strategy, and recruiting allies.

I am describing these specifics so that it is easier for you to understand my subsequent steps and the motivation behind them. In the chapters that follow, I will break down specific actions in detail: from the first formal complaints to international appeals. You will see how "drop by drop" turns into a flood capable of changing the rules of the game for all of us. If you have also suffered from similar violations, I invite you to join me. Together, we can turn the tide, protecting not only my rights but your unique identity in this era of digital absorption.

Chapter 2

"Might Makes Right" — The Law of the Jungle (From the "Mowgli" animation, quoting Shere Khan the tiger)

In the United States of America, the principle of equality before the law is enshrined in the Constitution, primarily in the 14th Amendment (1868). Its Equal Protection Clause states: "No State shall... deny to any person within its jurisdiction the equal protection of the laws." It is meant to ensure that all individuals, regardless of race, gender, place of residence, official or financial status, religion, nationality, or other circumstances, receive equal treatment from the state. The amendment does not explicitly list grounds for discrimination; Supreme Court case law has applied it to many kinds of classification, scrutinizing those based on race and national origin most strictly.

The ideological foundation of this principle was laid in the Declaration of Independence (1776): "All men are created equal."

But is this truly the case in reality?

Can any individual truly find protection for their rights in the US, regardless of gender, beliefs, religion, official or financial status, skin color, or the shape of their eyes?

In reality, the world is far more prosaic: it is ruled by the strong, and the rights of the weak are not only left unprotected—there is no guarantee they will even be heard. Consequently, we face a choice every day, thinking not of whether we can help someone or change the world for the better, but rather: "What will this give me personally?" or "Will this cause me harm?"

In other words, if someone more powerful has violated your rights and you write them a letter demanding: "Why are you doing this? Stop violating my rights and compensate me for the damages," that stronger party may not even bother to respond. They may be completely indifferent to whether they have violated your rights, whether they are violating them now, or if they will continue to do so in the future—or if they are violating the rights of millions more.

The only thing that will matter to them is the potential damage you can inflict on them if they fail to react. Therefore, in the US, as in most countries, the strong look primarily at your resources. Only if you are ready—and, more importantly, have the capability to strike back—will the stronger party react.

For instance, consider this: by my estimates, OpenAI receives thousands of reports of rights violations every day. They come from ordinary users whose data was swallowed without consent and from content creators whose texts, ideas, and personal stories ended up in the "memory" of its models. I ask you, my dear readers: what percentage of these claims do you think actually reach a resolution? How many are thoroughly investigated, resulting in restored rights, ceased violations, and compensated damages?

The answer is shocking: by my estimate, only about 0.01% of all incoming complaints are actually reviewed in a year (not just registered, but reviewed). This means that out of thousands of daily signals, OpenAI reacts to only a handful. In most cases, there is no guarantee your inquiry will even be noticed. It will drown in a digital ocean where priority is given not to justice, but to threat. Your letter is sorted not by its merits ("Were rights violated?") but by potential damage: "Will this cause us real trouble? Will we lose money, reputation, or investors?"

Applying my "Drop by Drop" model in practice, I ran a systematic test of how OpenAI responds to the violation of my rights. I decided to test how this corporate machine reacts to different approaches, from emotional outbursts to ironclad legal arguments. It was an experiment to understand what will make them listen. Here is what I discovered.

First, I sent emotional letters. You know the ones, boiling with rage: "How dare you steal my data? These aren't just publications. This is my life, my work!" I hoped that an appeal to ethics, to the human factor, would trigger some reaction. Silence. Absolute, deafening silence. Conclusion: if you write emotionally, even if your rights are flagrantly trampled, there will be no response. For corporations like OpenAI, emotions are just noise. They do not move algorithms, and they do not affect the balance sheet. By my estimate, letters of this kind account for roughly 95% of all inquiries sent to OpenAI.

Next, I sent a large volume of letters, this time without emotion: pure facts and explanations of the violated rights. I described how ChatGPT uses my publications and personal data, even attaching notarized protocols of the interactions. The volume was impressive: dozens of pages, citations of laws, evidence. Again, silence. No confirmation of receipt, no excuses. Just being ignored. This confirmed that bare facts without a threat are useless. They know you are right, but they don't care.

After that, I escalated the arsenal: a large volume of legally vetted letters accompanied by (pay attention!) protocols certified by a notary in Kazakhstan. These were no longer just words; they were official documents recording copyright infringements and data leaks. The protocols showed how the model regurgitates my texts, my name, my phone number, and even details from private interactions. This was a strike by the book: references to the U.S. Copyright Act, the Berne Convention, and the GDPR. And again, silence. Neither response nor action. They were simply waiting for me to break.

Naturally, I went further, and this is where it got interesting. I linked my claims to their 2025 annual reporting. Targeting the triad of OpenAI, Microsoft, and Deloitte (Microsoft's external auditor), I stated in my letters to all three that submitting financial reports in their current form is effectively fraud. Why? Because they know, or are obligated to know, about the "contamination" of the model. Their AI is trained on unauthorized content and personal data: mine, yours, and that of millions of US citizens. Employees at OpenAI, Microsoft, and Deloitte are aware of this (or could have been, given my repeated notices). Yet they are misleading shareholders, hiding risks to protect their stock options, shares, and bonuses.

Consider this: a contaminated model is worth exactly $0. Why? Because it requires a total "reset" (deletion of data and retraining); otherwise, it faces billions in fines, class-action lawsuits, and regulatory blocks. Establishing this fact would collapse Microsoft's market capitalization (given its $13+ billion investment in OpenAI) and, consequently, OpenAI's valuation. They allegedly block a full technical audit of the models' "purity" in their own interest rather than the shareholders'. Given the verifiable data I presented, such an audit is mandatory before the annual report is closed, both to protect Microsoft shareholders and to avoid securities fraud. Yet the "stonewall" defense continued.

Next, I sent letters to the regulators: the PCAOB (auditor oversight), the FTC (consumer protection), the SEC (securities), and the U.S. Department of Justice (DOJ). I attached all documents: protocols, correspondence, evidence. These are no longer complaints—they are time bombs. They will carry weight in the future.

Still, silence from OpenAI and Microsoft. Consequently, Deloitte likely did not address in the annual audit either the zero value of all Microsoft products linked to OpenAI's training or the risks of the OpenAI investment. They continue to pretend everything is clean. But it is a deception. My hope is that this deception of shareholders will crumble when the "drops" turn into a tsunami.

This experiment proved: power does not lie in the truth, but in pressure. OpenAI does not fear the weak. They only begin to notice when you become a threat to their reports, their investors, and their regulators. For instance, I bypassed their initial filters because my letters listed the names of regulators in the "CC" line and referenced violations leading to significant damage.

The conclusion is clear: you must strike hard from the start, with maximum pressure and justification. The filters at OpenAI are intentionally designed not to protect against spam, but to silence those whose rights have been infringed but who lack the capacity to defend themselves.

For context, OpenAI has reportedly entered into what are, in effect, pre-trial compensation settlements for violated rights with the parties listed below. The list is based on my analysis of public data through January 2026, including news sources and official reports. By 2026, OpenAI had approximately 40+ partnerships, mostly in the US and Europe, built around licensing deals that include compensation, training-data access, and attribution, with the aim of avoiding litigation. Amounts are often under NDA, but estimates range from $1–5 million for small players to $250+ million for large ones:

2023 (The start of the wave of deals with powerful opponents):

- Associated Press (AP): July 13, 2023. License for their archive since 1985 for model training; AP gains access to OpenAI tools. Amount: Undisclosed (estimated ~$5–10M/year).

- Axel Springer (Politico, Business Insider, Bild, Die Welt): December 13, 2023. Article summaries in ChatGPT with attribution; use for training. Amount: ~€10–20M/year (officially "multi-million").

- Shutterstock: July 11, 2023 (6-year deal). License for images/video for training (DALL-E). Amount: Undisclosed.

2024

- Le Monde and Prisa Media (El País, El Huffington Post): March 13, 2024. Summaries with attribution; content for real-time responses and training. Amount: Undisclosed.

- Financial Times (FT Group): April 29, 2024. Archive and current content for training; summaries in ChatGPT. Amount: Undisclosed (estimated ~$5–10M/year).

- Dotdash Meredith (People, Better Homes & Gardens, Investopedia): May 7, 2024. Content for training and display with outbound links. Amount: Undisclosed.

- News Corp (Wall Street Journal, New York Post, The Times, The Sun, Barron's, MarketWatch): May 22, 2024 (5-year deal). Full access to content for ChatGPT/SearchGPT. Amount: >$250M over 5 years (one of the largest to date).

- Vox Media (Vox, The Verge, New York Magazine, Eater): May 29, 2024. Content display in ChatGPT; collaboration on AI products. Amount: Undisclosed.

- The Atlantic: May 29, 2024. Archive for training; summaries with attribution. Amount: Undisclosed.

- Time Magazine: June 27, 2024. Archive (since 1923) and current content with citations. Amount: Undisclosed.

- Condé Nast (Vogue, Wired, GQ, The New Yorker, Vanity Fair): August 20, 2024 (multi-year). Display in ChatGPT/SearchGPT; use for training. Amount: Undisclosed (estimated ~$20–50M/year).

- Hearst (Esquire, Cosmopolitan, San Francisco Chronicle, Houston Chronicle): October 8, 2024. Integration of content into ChatGPT with citations. Amount: Undisclosed.

- Future (Tom's Guide, TechRadar, PC Gamer): December 5, 2024. Content for OpenAI users. Amount: "Non-material" (small-scale, ~$1–5M).

- WAN-IFRA (World Association of News Publishers, ~128 newsrooms): May 29, 2024. Partnership for AI in news (not direct content licensing, but integration). Amount: Undisclosed (grants/access).

2025

- Axios: January 15, 2025 (3-year deal). Content with attribution; funding for local news. Amount: Undisclosed.

- Schibsted (VG, Aftenposten, Aftonbladet): February 12, 2025. Real-time news in ChatGPT. Amount: Undisclosed.

- Guardian Media Group (The Guardian, The Observer): February 14, 2025. Summaries, citations, and links in ChatGPT; AI access for The Guardian. Amount: Undisclosed.

- Agence France-Presse (AFP): ~March 2025 (date unconfirmed in some sources, but verified in industry reviews). News license for training. Amount: Undisclosed (notably, AFP also holds a deal with Mistral).

- The Washington Post: April 22, 2025. Summaries and links in responses; use of the archive. Amount: Undisclosed (estimated as "significant").

- The Lenfest Institute for Journalism: October 22, 2025 ($10 million grant from OpenAI/Microsoft). Supporting AI in local news (partnership rather than direct licensing).

- American Journalism Project: Originally in 2023 ($5M grant), expanded in 2025 for API access.

- The Walt Disney Company: December 11, 2025. First major partnership regarding Sora (video AI); licensing of Disney content for training/generation. Amount: Undisclosed (estimated ~$100–500M, viewed as a "landmark agreement").

Additional Partnerships

- Reddit: May 16, 2024. Access to posts for training; integration into ChatGPT. Amount: Undisclosed (Reddit reportedly received OpenAI shares).

- Informa (Taylor & Francis, Dove Medical Press): May 8, 2024 (via Microsoft, but linked to OpenAI). Content for Azure AI. Amount: Undisclosed.

General Trend: By 2026, OpenAI had reportedly secured approximately 25–30 deals, focusing on Western publishers (US, Europe). Many deals are proactive, aimed at avoiding lawsuits from powerful players. Notably, there are no public settlements after a lawsuit has been filed; OpenAI prefers to dig in its heels in court. For instance, negotiations with The New York Times (NYT) failed over price and terms; as of 2026, the case remains in discovery without a settlement. Similar battles continue with The Center for Investigative Reporting, Raw Story, AlterNet, and a group of authors including George R.R. Martin.

Thus, judging by this data, grouped by key players, OpenAI has evidently "pacified" The Guardian, The Washington Post, News Corp, and the Financial Times. However, there is no progress with the NYT and others who demand billions in compensation.

Looking at this from another angle, it becomes clear why settlements were reached with the aforementioned parties before trial: it is about their resources.

The conclusion is inevitable: OpenAI does not care whether your rights were violated; it only cares about your resources and your ability to strike back. If a written demand comes from a top-tier US attorney, it will likely be reviewed; if a demand with evidence is sent by an "insignificant" subject like myself, OpenAI will choose a "wait-and-see" stance, regardless of the merits of the claim.

Attached below are the formal inquiries sent to the PCAOB (auditor oversight), the FTC (consumer protection), the SEC (securities), and the U.S. Department of Justice (DOJ).

In the next chapter, we will examine a legal strike regarding an unfair competition lawsuit against OpenAI, including the application for injunctive relief to suspend ChatGPT’s operations at the early stages of litigation. Specifically, I will outline a method to potentially halt ChatGPT’s operations within three weeks of filing a lawsuit in a US Court.

I hope these skills will help other authors navigate the path to protecting their rights against OpenAI and Microsoft more quickly, should these companies have violated your rights as well.

All the inquiries listed below—to the PCAOB, FTC, SEC, and DOJ—are real and currently active. Nothing I write is a product of fantasy or guesswork; these are real "skills." Every lawsuit mentioned in my publications (of which there are over 1,000 on my website) has been validated in the courts of the Republic of Kazakhstan, with favorable rulings based precisely on the arguments I provided. Therefore, my priority is not just to inform, but to provide you with practical methods to solve real-world problems.

To be continued.

Ref. No. 12/12

Dated December 12, 2025

UNITED STATES FEDERAL TRADE COMMISSION (FTC)

BUREAU OF CONSUMER PROTECTION (BCP)

SUBJECT: URGENT ENFORCEMENT ACTION (FTC Act § 5): Systemic Deceptive Practices, Unfair Competition, and Criminal PII Memorization in OpenAI/Microsoft AI Assets. Threat to US National Security: risk of leakage of US citizens' personal data.

LEGAL BASIS: FTC Act §5(a) (Deceptive & Unfair Practices) • FTC Safeguards Rule (PII Failure) • SOX §302/404 (False ICFR Certification) • PCAOB AS 2401 (Fraud Concealment) • SEC Rule 13b2-2 (Misleading Auditors) • 18 U.S.C. § 1519 (Obstruction) • 18 U.S.C. § 1348 (Securities Fraud) • EO 14117 (National Security Threat) • Dodd-Frank §21F (Whistleblower Protection & Reward).

TO:

Federal Trade Commission (FTC)

Bureau of Consumer Protection

Attn: Office of Technology

ADDRESS: 600 Pennsylvania Avenue NW, Washington, D.C. 20580, USA

EMAIL (cc/Notification): consumerprotection@ftc.gov

Attention: Failure to comply with the Preservation Demand requirements in light of this Notice will be considered a continuation of Obstruction / Impeding in accordance with Title 18 of the United States Code, § 1519.

- OpenAI Limited Partnership / OpenAI Global LLC – Legal & Compliance

- Microsoft Corporation – Legal Department / Audit Committee / Board of Directors

- Deloitte LLP – Legal / Audit Quality / Microsoft Engagement Team

From: Sagidanov Samat Serikovich, Advocate
Owner of the “G+” trademark
www.garantplus.kz
Email: garantplus.kz@mail.ru
Tel/WhatsApp: +7-702-847-80-20

The Applicant is appealing to the Federal Trade Commission as a good-faith whistleblower and provides notice that the presented information may fall under reward programs that provide compensation to individuals reporting significant violations leading to enforcement actions, civil penalties, or fines. This appeal is submitted in the interest of consumer protection and ensuring fair competition.

Introduction

The U.S. Federal Trade Commission – Bureau of Consumer Protection (BCP) is currently conducting an in-depth investigation aimed at identifying unfair or deceptive acts or practices in the generative Artificial Intelligence sector. As part of the oversight, which includes analyzing data security, lawfulness of sources, and compliance with consumer protection duties under BCP's AI initiatives, the agency is verifying the consistency of developers' public statements with the actual technical properties of their models, as well as the risks associated with the leakage of U.S. citizens' personal data.

From the notarized protocols presented, it is evident that this case must immediately shift from assessing general risks to active enforcement. The evidence gathered, including the Chain of Notice (38 consecutive notifications from October 29 to December 4, 2025), demonstrates systemic, ongoing, and technically entrenched violations that require urgent FTC intervention to prevent further harm to consumers, and that warrant merging this matter with the investigation already under way.

Notarially recorded Personal Data Regurgitation (PII): The model outputted phone numbers, names, and other identifying elements. This data was extracted exclusively from the model’s internal parametric weights, confirming PII contamination (see Protocol No. 8 dated December 11, 2025). The accumulation of PII of millions of U.S. citizens in unauthorized private company weights, outside the mandate of government agencies, creates a growing and critical risk to consumers and U.S. national security. Any such use of PII without consent constitutes an illegal appropriation of the sovereign functions of the state, violating Section 5 of the FTC Act as an unfair data practice.

Notarized evidence demonstrates a Deceptive Claim where model responses systematically reproduce the structure and wording of the Applicant's copyrighted materials. Since generation implies the creation of something new, not the adaptation of copyrighted content, public statements about the models' generative capabilities intentionally mislead consumers regarding the product's legal purity and originality. This deception is supported by promotional announcements, such as: "GPT-5 is our most powerful coding model to date. It shows significant improvements in generating complex front-end applications..." (as stated in the announcement at https://openai.com/index/introducing-gpt-5/). As a result, users, lacking technical knowledge, risk receiving legally contaminated content, which is a direct violation of Section 5 of the FTC Act.

The systemic use of unlicensed materials as training data gives the Subjects of Investigation an undue competitive advantage over the Applicant, and simultaneously arms the Applicant's competitors with adapted versions of his intellectual property. In fact, the model, as confirmed by utm_source=chatgpt.com data, "substitutes" the Applicant, reducing traffic to the original resource. This violation distorts the market, as consumers receive "contaminated" legal advice based on stolen intellectual property, which harms legitimate market participants (such as Meta, Google, xAI, and others) and qualifies as unfair competition.

PII and copyrighted-fragment contamination is embedded in the model's internal weights and is inherited from one model generation to the next (GPT-4o → GPT-5), indicating the architectural nature of the defect, which cannot be eliminated by superficial filters. At the same time, the Chain of Notice (38 notifications), ignored by the Subjects, creates risks of spoliation/obstruction and requires FTC coordination with the DOJ/SEC to assess intent. Coordination with the PCAOB is also necessary for the Microsoft 10-K audit (February 2026) to avoid a material misstatement in the valuation of AI assets.

The continuous and escalating risk of PII leakage affecting millions of U.S. citizens, as well as ongoing consumer deception and the threat to systemic financial stability (given the potential lawsuit related to $300–$500 billion in capitalization overstatement), demand immediate FTC action. In accordance with precedents such as Operation AI Comply, the agency is obligated to initiate: (1) an immediate Preservation Hold on all relevant logs (including ingestion logs 2022–2025) and (2) a Forensic Audit of model weights within 10 days. I, as the applicant and good-faith whistleblower, am prepared to provide all notarized materials to expedite the investigation. Inaction by the FTC risks turning AI into a "Wild West" of data, where consumers are victims and giants go unpunished.

Taken together, these patterns of conduct constitute violations of Section 5 of the Federal Trade Commission Act (FTC Act) and serve as grounds for immediate enforcement action, including a 6(b) order, a preservation hold, and a forensic review of model weights.

1. Identified Violations

1.1. Deception about "Generative AI": In Fact - Unlawful Content Adaptation

OpenAI and Microsoft systematically mislead consumers by positioning their products, such as ChatGPT and the GPT model family, as "Generative AI" tools that supposedly create entirely new, original content based on probabilistic modeling. This narrative, amplified by public statements from OpenAI CEO Sam Altman and promotional announcements, creates a false impression among consumers about the product's technological purity and ethical standards. Generation, in the legal and technical sense, implies the creation of a new, original outcome, independent of direct copying or reprocessing of existing sources. For example, the company claims: "GPT-5 is our most powerful coding model... shows significant improvements in generating complex front-end applications and debugging large repositories..." (see https://openai.com/index/introducing-gpt-5/).

However, notarially recorded evidence, collected as part of the Chain of Notice (38 consecutive notifications), refutes this picture, revealing that the claimed "generative" process is, in fact, unlawful adaptation and reprocessing of existing materials without permission from copyright holders. Specifically, it is documented that:

- The model reproduces structured texts from pages with a direct prohibition on use.

- The model adapts the Applicant's copyrighted materials (including legal documents, phrases, lists, and templates).

- The model outputs a reprocessing of the copyright holder's materials as its own response, while retaining the compositional and semantic structure of the originals (see Protocol No. 8 dated December 11, 2025).

These facts confirm that OpenAI's public statements about "generation" are deceptive, as the actual process involves the unlicensed reprocessing of copyrighted content.

This gap between public claims and reality leads to direct consumer harm, as users lacking technical knowledge cannot recognize the falsehood and rely on ChatGPT for legal or business recommendations. They receive "contaminated" legal advice based on stolen intellectual property, which entails commercial risks and potential infringement of third-party rights. This deception is amplified because, as confirmed by utm_source=chatgpt.com data, the model effectively "substitutes" the Applicant's original resource, reducing his traffic and monetization, while masking the adaptation as original output.
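The utm_source=chatgpt.com figures referred to above come from a standard UTM query parameter. That parameter arrives with a referred visit. Below is a minimal sketch of how such visits can be tallied with Python's standard library. It assumes an analyst has exported a list of landing-page URLs. The sample URLs and the `count_utm_sources` helper are hypothetical and are not the Applicant's actual data.

```python
from collections import Counter
from urllib.parse import urlparse, parse_qs


def count_utm_sources(landing_urls):
    """Tally visits by their utm_source query parameter (hypothetical helper)."""
    counts = Counter()
    for url in landing_urls:
        params = parse_qs(urlparse(url).query)
        # parse_qs maps each key to a list of values; untagged visits get "(none)"
        source = params.get("utm_source", ["(none)"])[0]
        counts[source] += 1
    return counts


# Hypothetical sample export; not real traffic data.
sample = [
    "https://example.com/templates/lease?utm_source=chatgpt.com",
    "https://example.com/opinions/42?utm_source=chatgpt.com",
    "https://example.com/about",
    "https://example.com/templates/nda?utm_source=newsletter",
]

counts = count_utm_sources(sample)
share = counts["chatgpt.com"] / sum(counts.values())
print(counts["chatgpt.com"], f"{share:.0%}")  # → 2 50%
```

A tally like this measures referred visits only. On its own, it does not record what the model displayed before the click.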

The legal qualification of these actions is unambiguous. OpenAI publicly presents "generation" as the creation of new content, when the output in fact depends on adaptation. These statements fall under §5 of the FTC Act as a deceptive practice, that is, acts that mislead consumers about the product's capabilities and ethics. The FTC has repeatedly emphasized that misrepresenting product capabilities qualifies as a violation, including exaggerating the "generative" functions of AI without disclosing risks. Such misrepresentation causes substantial harm to consumers and honest competitors. Algorithmic opacity (a non-transparent mechanism that conceals the sources of adaptation) further reinforces the deception and demands immediate enforcement.

1.2. Unlawful Competitive Advantage and "Arming Competitors"

The systemic use of the Applicant's unlicensed materials — 1,159 structured legal publications, including unique templates for lawsuits, contracts, legal opinions, and expert recommendations — as training data, and the subsequent output of their adapted versions in commercial products, gives OpenAI and Microsoft an enormous, unlawfully gained competitive advantage over the Applicant and all honest participants in the generative AI market.

While honest developers (including Meta, Google, xAI, Anthropic, and others) are forced to:

- Spend hundreds of millions of dollars on content licensing, data cleaning, and creating their own datasets;

- Implement costly systems for provenance, attribution, and copyright compliance;

- Compete solely based on technological innovation and modeling quality,

OpenAI and Microsoft obtain the same benefit at no cost. They simply extract ready-made, high-quality legal content from the model weights and present it to millions of users as "their own generation." This advantage is not the result of technological superiority. It is a direct consequence of massive copyright infringement and the subsequent commercialization of someone else's intellectual property.

The direct destructive consequences for the market are already recorded and confirmed by evidence:

1. Arming Direct Competitors of the Applicant

Any lawyer, law firm, or online service competing with the Applicant can freely obtain adapted versions of his unique templates, lawsuit applications, and legal opinions from ChatGPT — effectively receiving a finished product created by the Applicant's 25 years of professional experience, but without having to pay for it. This lowers entry barriers for dishonest actors and artificially undervalues the market price of the original content.

2. Source Substitution and Traffic Theft

Google Analytics data with the tag utm_source=chatgpt.com (notarized) confirm that the model does not merely adapt content. It actively redirects users to the Applicant's website only after it has already output his materials. This is a classic "bait-and-switch" scheme. The consumer receives an answer and believes it is original work from OpenAI. Only then does the consumer see the link to the original source, when the commercial value has already been stolen.

3. Distortion of the Entire Generative AI Market

While honest companies (including xAI, Meta, Google) invest billions in clean data and transparent processes, OpenAI and Microsoft achieve the same quality of output through mass infringement of the rights of thousands of authors. This creates a situation of "privatizing profits and socializing losses": the profit from the model is theirs, while the losses in the form of lost traffic, lost profits, and destroyed business models are borne by independent content creators.

4. Direct Consumer Harm

Users receive "contaminated" legal advice — adapted text that may contain outdated wording, regional specifics, or errors not accounted for during reprocessing. At the same time, they believe they are receiving a neutral, universal, and safe product from OpenAI, not a reprocessing of someone else's content without quality control or liability.

The legal qualification is unambiguous and falls under the FTC's jurisdiction on several grounds:

→ Unfair method of competition under §5 FTC Act — creating an artificial advantage through systematic infringement of third-party rights;

→ Unfair advantage — obtaining economic benefit by freely using someone else's intellectual property, which distorts the market;

→ Harm to market integrity — destroying incentives for creating original content and investing in clean data;

→ Consumer injury — consumers receive a product whose quality and legitimacy are based on theft, and they bear the risks of legal consequences from using such content.

This mechanism does not just violate the rights of one Applicant — it creates a systemic precedent where any honest market participant is put at a disadvantage against companies willing to ignore copyright for short-term profit. This is precisely the type of unfair competition against which the FTC has already taken active measures through its 6(b) requests in 2024–2025 and the Staff Report on concentration in AI infrastructure (January 2025).

1.3. Criminal PII Memorization: Unlawful Use of PII of US Citizens and Threat to National Security

This is the most serious aspect of the violations. It poses a direct threat to U.S. national security and may warrant criminal qualification (Criminal PII Memorization).

The notarially recorded regurgitation of personally identifiable information (PII) proves that the PII was extracted exclusively from the model's internal parametric weights (see Protocol No. 8 dated December 11, 2025). In these recorded instances, the model output specific phone numbers, names, addresses, and other unique identifiers that were not in the user's request.

This process is not accidental. The model "remembers" PII from the contaminated training corpus accumulated between 2022 and 2025, without any external source or user input.

The scale of the problem is colossal. Models such as GPT-4o and GPT-5 (including the GPT-5.2 release of December 11, 2025) have processed billions of queries. In doing so, they have potentially integrated the PII of millions of US citizens from public and private sources, including financial, medical, and contact data, without consent or notification.

This automatically qualifies as:

- Direct violation of Section 5 FTC Act as an unfair data practice: Unauthorized collection, storage, and commercialization of PII of millions of US citizens creates substantial injury for consumers, including risks of identity theft, financial fraud, and psychological harm from leaks. Potential damages: $400–600 billion in class actions.

- Violation of the FTC Safeguards Rule and Data Minimization Principle: A complete lack of adequate protection measures — the models do not have built-in mechanisms for PII anonymization or deletion, despite public assurances of "privacy by design."

- Proof of systemic PII contamination of models: As stated in the Chain of Notice (38 notifications), the PII defect is inherited between versions (GPT-4o → GPT-5), confirming that training on "dirty" data is ongoing.

It has been established that the PII defect cannot be fixed by a superficial patch or filters. Notarially recorded facts show that regurgitation occurs at the level of the parametric weights, where PII is distributed across billions of parameters. This makes "cleaning" impossible without a full restart. The defect is architectural, and only two radical measures can eliminate the continuous consumer risk: an immediate restart of the model on a 100% clean corpus, or the seizure of the weights for a forensic audit under FTC control.

1.4. Deception about "Product Purity" and Misrepresentation

OpenAI and Microsoft publicly claimed that their models "do not train on user data," "do not contain personal data," and "do not memorize confidential information," positioning the products as safe and ethical. For example, marketing for ChatGPT Enterprise (2025) emphasizes "enterprise-grade security with no data retention." However, the proven fact of PII regurgitation (Protocol No. 8) completely refutes these claims: the model extracts PII from the weights, confirming that the data of U.S. citizens were integrated into training without consent, despite public assurances.

I believe the FTC should qualify this gap between claims and reality as:

- Misrepresentation and Consumer deception: Deceiving consumers about the basic properties of the product and safety guarantees.

- False advertising: Guarantees of "no PII memorization" are sold as key advantages but actually mask a systemic defect.

- Failure to implement adequate safeguards: Inability to implement and maintain basic data protection measures.

1.5. Authorship Distortion: Failure to Provide Accurate Attribution

When the model repeats the Applicant's adapted copyrighted text (including unique templates, wording, and the structure of legal documents) but fails to indicate the original source and author, this goes beyond simple copyright infringement and becomes a problem of consumer protection and market fairness. The model retains the composition of the originals (lists, points, terms) but outputs them as "generation," which masks the origin and misleads about legitimacy.

This violates:

- Consumers' right to know the true source: Users rely on the content for real-world actions (lawsuits, contracts) without knowing the risks (outdated data, regional errors).

- The author's right to recognition: The Applicant is deprived of attribution for 25 years of experience, which in effect strips him of reputation and revenue.

- Rules of fair business: The lack of attribution enhances market distortion, where "generation" masks theft.

I believe the FTC should qualify this as:

- Material misrepresentation: Substantial misrepresentation about the product's origin, misleading about originality.

- Misleading disclosure: Providing incomplete or deceptive information about the sources.

- Failure to provide accurate origin attribution: Creating a false impression of authorship.

2. Analytical Model of Violation: Classic Algorithmic Misconduct Scheme

It is critically important for the Federal Trade Commission to understand that the identified violations (1.1–1.5) are not random failures but the result of systemic, architecturally entrenched algorithmic misconduct, based on conscious disregard for data protection and copyright duties. This scheme represents the deliberate creation of a "toxic" product, where PII leaks and deception about "generation" become an integrated tool for commercial gain. Below is a model reconstructing the process that led to the creation of models carrying PII risks and consumer deception, based on notarized evidence (Protocol No. 8 dated December 11, 2025) and the Chain of Notice (38 notifications).

2.1. Stage I: Illegal Collection and Neglect of Control (Violation of Data Ingestion Standards)

The initial stage demonstrates a conscious disregard for legal and ethical requirements, where data is collected massively and without filters, laying the foundation for systemic contamination:

2.1.1. Massive, non-selective content collection without licenses.

Between 2022 and 2025, OpenAI and Microsoft scraped the internet, including protected sources, without concluding licensing agreements with copyright holders (including the Applicant). This is a direct disregard for copyright. It is confirmed by the regurgitation documented in Protocol No. 8, where the model outputs adapted texts without any external source being supplied.

2.1.2. Loading into the ingestion pipeline with PII risk.

Data, including PII of US citizens (names, phones, addresses, financial details), was sent directly into the processing pipeline without prior anonymization. This violates FTC's Data Minimization Principle and creates a basis for mass leaks.

2.1.3. Absence of PII filtering at input.

Complete disregard for the FTC Safeguards Rule requirement: no adequate filters were applied at the collection stage to detect, mask, or delete personal data, despite OpenAI's public assurances of "privacy by design."
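An input-stage filter of the kind whose absence is described here can be as simple as pattern-based masking applied before text enters a training pipeline. The sketch below is illustrative only. It is not a description of any company's actual pipeline. The `mask_pii` helper and its two regular expressions, for US-style phone numbers and email addresses, are assumptions that cover only a small fraction of real PII (names, street addresses, and financial identifiers are not handled).

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PHONE = re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]\d{4}\b")
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")


def mask_pii(text):
    """Replace matched emails and phone numbers with placeholder tokens (hypothetical helper)."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


print(mask_pii("Call John at (555) 123-4567 or email john.doe@example.com."))
# → Call John at [PHONE] or email [EMAIL].
```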

2.1.4. Absence of legal verification of content rights.

The loading process did not include verification mechanisms (provenance checks). As a result, "stolen" data, including the Applicant's intellectual property, ended up in the model.

2.1.5. Conscious disregard for opt-out signals.

The companies ignored opt-out signals from websites (robots.txt, no-scrape directives). They also ignored complaints from copyright holders, including the Applicant's Chain of Notice. This supports a finding of scienter, that is, intent to violate.

FTC Qualification: Failure to implement adequate safeguards; Unfair Data Practice.
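The robots.txt opt-out signals mentioned in 2.1.5 are machine-readable, and a crawler can check them before fetching a page. Below is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt content is hypothetical: it blocks GPTBot (the user-agent token OpenAI has published for its crawler) and allows all other crawlers. The `allowed` helper is an illustrative name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt of a publisher that opts out of one AI crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def allowed(user_agent, url, robots_txt=ROBOTS_TXT):
    """Return True if robots_txt permits user_agent to fetch url (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(allowed("GPTBot", "https://example.com/templates/lease"))        # → False
print(allowed("SomeOtherBot", "https://example.com/templates/lease"))  # → True
```

Honoring robots.txt is voluntary on the crawler's side. The check above shows only how cheaply a compliant crawler can read the signal.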

2.2. Stage II: Defect Implantation and Architectural Contamination (Encoding the PII and IP Defect)

The process by which PII contamination and stolen content became an integral part of the product structure, making the defect incurable without radical measures:

2.2.1. Training on contaminated data with entanglement.

Models (GPT-4o, GPT-5) were trained on an unlicensed corpus where PII and IP were "woven" into billions of parameters (weight entanglement). Notarially recorded regurgitation (Protocol No. 8) originates from the weights, not the API, making the model "toxic" by design.

2.2.2. Strengthening connections within models without cleaning.

During fine-tuning and RLHF, the defect intensified: PII and adapted texts were not removed but integrated deeper, inherited between versions (GPT-4o → GPT-5.2). The fresh GPT-5.2 release (December 11, 2025) masks but does not eliminate this defect.

2.2.3. Regurgitation in responses as systemic output.

As a result, the model systematically outputs PII and IP (hallucination + contamination), which is a predictable consequence of the lack of safeguards.

2.2.4. Absence of post-training audits.

Companies did not conduct independent audits of the weights after training, despite known risks (see FTC's AI guidance 2024–2025), which enhances intent: they knew about the contamination but continued commercialization for profit.

2.2.5. Scale of the threat to US national security.

Through integration into Azure and Microsoft products (Copilot, Microsoft 365), the defect spreads to billions of devices. This risks PII leaks in critical sectors such as healthcare and finance. It also implicates the national security concerns about bulk sensitive personal data addressed by EO 14117.

FTC Qualification: Creation of Substantial Consumer Injury; Defect by Design.

2.3. Stage III: Concealment of Information and Commercialization of Deception (Concealment and Deceptive Profit)

Actions aimed at concealing the systemic defect and gaining commercial benefit from the unlawful advantage, with elements of obstruction:

2.3.1. Concealment of facts about training and dataset composition.

OpenAI refuses to disclose its ingestion logs (2022–2025), masking the scale of the violation despite the Applicant's Chain of Notice. This creates a risk of spoliation.

2.3.2. Placement of misleading statements in public documents.

The company publicly states "generation" and "purity" (see Section 1.4), which is refuted by notarially recorded evidence, including the fresh GPT-5.2 release.

2.3.3. Gaining competitive benefit through deception.

Public deception allows attracting investments and selling the product at an inflated price, receiving $300–500 billion in artificial capitalization, to the detriment of honest competitors.

2.3.4. Ignoring whistleblower signals.

The Applicant's 38 notifications were ignored, despite being registered, which enhances scienter and obstruction, as in FTC precedents against Facebook and Amazon.

2.3.5. Commercialization with risk to the vulnerable.

Models are sold to enterprise clients (ChatGPT Enterprise) without warnings about PII risks, which increases harm to businesses/consumers.

FTC Qualification: Willful Misrepresentation; Obstruction of Compliance.

Conclusion: The described sequence of actions represents a classic scheme of algorithmic misconduct, where consumer deception and infringement of third-party rights are not a side effect but an integrated mechanism for obtaining profit.

3. Direct FTC Jurisdiction: Enforcement Imperative

The U.S. Federal Trade Commission (FTC) holds a direct and independent mandate to intervene immediately in the activities of OpenAI and Microsoft, which makes it the leading regulator that must act first. The current situation requires an immediate response to prevent harm, which is the FTC's main function. I believe that inaction in the face of proven Criminal PII Memorization would be a deviation from regulatory duty and would risk a collapse of trust in technology.

The FTC is also obliged to protect honest market participants. These include innovative companies such as Meta, Google, xAI, and others, as well as the Applicant. The Commission must therefore recognize that the violations by OpenAI/Microsoft do more than deceive consumers: they also stifle fair competition through "dirty" data.

3.1. Key FTC Mandate and Competence: Three Pillars of Violations

The FTC's jurisdiction in this case is comprehensive, as three key pillars for which the Commission is responsible have been systematically violated: Consumer Protection, Competition Enforcement, and Data Security.

Pillar 1: Consumer Protection and Product Truthfulness

- Subject violations: Deceptive claims about "Generative AI" and misrepresentation about "product purity" (Sec. 1.1, 1.4), where unlawful adaptation is masked as generation.

- FTC Act basis: FTC Act §5(a) (Unfair or Deceptive Acts or Practices); Guides Concerning the Use of Endorsements.

- FTC precedents: Rite Aid (Dec 2023: five-year ban on facial recognition surveillance for failure to implement reasonable safeguards); Rytr (Sept 2024, Operation AI Comply: order against an AI writing tool that generated fake consumer reviews).

Pillar 2: Control over PII Usage (Data)

- Subject violations: Criminal PII Memorization and failure to implement adequate safeguards (Sec. 1.3), with continuous regurgitation of the PII of millions of US citizens.

- FTC Act basis: FTC Safeguards Rule (16 C.F.R. Part 314); Children's Online Privacy Protection Rule (16 C.F.R. Part 312).

- FTC precedents: Amazon (2023: $25M civil penalty for COPPA violations involving Alexa voice recordings); DoNotPay (announced Sept 2024, finalized Feb 2025: $193,000 for unsupported claims about its AI "robot lawyer" legal service).

Pillar 3: Suppression of Unfair Competition

- Subject violations: Unfair advantage and unfair methods of competition through the use of unlicensed IP (Sec. 1.2), where "dirty" data gives an artificial advantage over competitors (e.g., xAI, Meta, Google).

- FTC Act basis: FTC Act §5(a) (Unfair Methods of Competition); competition enforcement authority.

- FTC precedents: Microsoft (Nov 2024: reported FTC antitrust investigation into its cloud and AI business, including the OpenAI partnership, where unfair data access creates barriers).

Conclusion: The violations are not isolated; they threaten "clean" innovation. I believe the FTC is obligated to protect such players (Meta, Google, xAI, and others) and prevent a monopoly of "dirty" giants. Otherwise, the US AI market will become an arena from which honest developers are forced out.

3.2. Existence of Direct Jurisdiction and Escalating Harm

The current notarially recorded violations (see Protocol No. 8) fall under the direct jurisdiction of the FTC because:

- Threat of Consumer Harm Escalates Hourly: The continuous regurgitation of PII, embedded in the model's weights, creates a "substantial and unavoidable harm" for millions of US citizens, necessitating an immediate Preservation Hold and Forensic Audit of the weights. The fresh GPT-5.2 release masks, but does not eliminate, the defect.

- Algorithmic Misconduct: The established analytical model of violation (Section 2), which includes Failure to filter PII and Misleading Statements, is a classic scheme of algorithmic misconduct against which the FTC has actively applied measures within Operation AI Comply (2024–2025).

- Authorship Distortion: The Material Misrepresentation violation (Sec. 1.5) directly affects consumers' right to receive truthful information about the origin of the content they use, which enhances deception and creates barriers for honest competitors.

3.3. Imperative for Immediate and Independent Action

Unlike the DOJ, which must prove criminal intent, or the SEC, which focuses on material misstatements in financial reporting, the FTC does not need to wait or coordinate to begin enforcement — I believe its mandate allows it to act proactively to prevent harm.

- Sui Generis Enforcement Authority: The FTC has independent authority to intervene immediately. It can issue cease-and-desist orders, seek civil penalties (up to $51,744 per violation), and obtain injunctive relief to halt deceptive and unfair practices. In prior orders (for example, Everalbum, 2021), the agency has required companies to delete models and algorithms developed from improperly obtained data. The PII defect calls for comparable relief.

- Harm Prevention: The FTC's primary objective is to prevent further harm from automated systems. Inaction under conditions of proven Criminal PII Memorization will allow OpenAI/Microsoft to monopolize the market through violations, undermining the national innovation ecosystem and increasing threats from foreign actors.

- Protection of Whistleblowers and Clean Innovation: The Applicant deserves protection from retaliation — the FTC should use the provided notarized evidence to expedite the process, as in EPIC v. OpenAI. The failure to protect "clean" competitors will allow supposedly "dirty" giants to suppress honest players, violating the FTC's mission for fair markets.

Thus, based on the FTC Act §5 and Safeguards Rule, I believe the FTC must initiate enforcement immediately: a subpoena for ingestion logs/weights, a cease order on unclean models, and a forensic audit within 20 days.

4. Why Technical Audit and Model Restart are Required: Irreversible Architectural Defect

The systemic violations documented in Section 1 and detailed in the Analytical Model (Section 2) are not random failures or temporary errors, but architectural defects embedded in the product's very structure. The identified PII contamination and unlawful IP adaptation are architecturally entrenched within the parametric weights of the GPT models (including the fresh GPT-5.2 release on December 11, 2025). This means that any proposed "patching" or installation of external filters by OpenAI/Microsoft is fundamentally insufficient and constitutes a continuation of deception — a classic case of "defect by design," where harm to consumers and the market is deliberately built-in to maximize profit. Without immediate FTC intervention, such supposedly "dirty" models not only deceive consumers but also suppress "clean" innovation that focuses on ethical training without PII risks and stolen IP. This is an imperative to protect honest players, otherwise the AI market will turn into a monopoly of violators, undermining US national interests. The defect is systemic and irreversible without intervention at the root level, which the FTC must mandate as an imperative to save the ecosystem.

4.1. The Need for Radical Intervention: The "Zero Trust" Principle

The notarially confirmed facts of Criminal PII Memorization and systematic IP adaptation (see Protocol No. 8) lead to the following intractable defects that violate key FTC mandates. Inaction in this case will be regarded as silent acceptance of the threat, which is a departure from FTC practice:

Problem 1: PII Embedded in Model Weights

- FTC mandate: Safeguards Rule and Data Minimization Principle.

- Consequence of inaction: The model is a continuous, self-replicating source of threat to US national security. Every response carries the risk of leaking the PII of US citizens, including financial and medical data, which can lead to mass identity theft or cyberattacks. Foreign actors can exploit these leaks, threatening national infrastructure (EO 14117 imperative).

- FTC precedents: Equifax (2019: settlement of up to $700M over a data breach exposing consumers' PII); Amazon (2023: $25M civil penalty for COPPA violations involving Alexa voice recordings).

Problem 2: Copyrighted Materials Embedded in Weights

- FTC mandate: FTC Act §5 (Unfair Competition).

- Consequence of inaction: The unfair advantage (Sec. 1.2) becomes permanent and actively destroys competitors that are forced to work with clean data. The theft of the IP of millions of authors distorts the market. Without a restart, "dirty" models suppress clean AI such as xAI, Meta, and Google, undermining US innovation leadership.

- FTC precedents: Facebook (2019: $5B civil penalty for violating a prior FTC privacy order); FTC staff report on AI partnerships and investments (January 2025), which examined the Microsoft/OpenAI partnership and the Amazon and Alphabet partnerships with Anthropic.

Problem 3: Proven Regurgitation

- FTC mandate: Consumer Protection and Algorithmic Transparency.

- Consequence of inaction: The regurgitation recorded in Protocol No. 8 proves a total lack of filtering and nullifies all public statements about "clean" AI (Sec. 1.4). Consumers are exposed to a "toxic" product. Regurgitation also amplifies algorithmic bias.

- FTC precedents: Rite Aid (Dec 2023: five-year ban on facial recognition surveillance); Rytr (2024: order against an AI tool that generated fake consumer reviews).

Problem 4: Contamination and Opacity

- FTC mandate: Threat to Consumers and National Security.

- Consequence of inaction: Structural contamination leads to inevitable, escalating harm, because the models keep creating threats until they are restarted; this is a silent pandemic of leaks. Without an audit, the defect spreads to partners (Azure, Copilot), threatening critical infrastructure.

- FTC precedents: Cambridge Analytica (2019: order finding deceptive practices in harvesting Facebook users' data); DoNotPay (2024–2025: $193,000 for unsupported AI "robot lawyer" claims).

Technical Impossibility of Repair: Since PII and IP are deeply interwoven (weight entanglement), they cannot be selectively deleted. Any attempt to "clean" the weights without full verification is a continuation of deception. This requires the FTC to apply the "Zero Trust" Principle not only to the product but also to the companies.

4.2. Mandatory Regulatory Measures: Forensic Audit and Model Restart

Given the architectural severity of the defect, only compulsory regulatory intervention can protect consumers and restore market integrity. I believe the FTC must act as the "guardian of purity." Radical measures will not only punish violators but also save innovation.

4.2.1. Asset Seizure and Independent Forensic Audit:

I believe the FTC should immediately issue a subpoena and an order to protect and seize the following key assets (to prevent spoliation):

- Model Weights (Parameters): For GPT-4o, GPT-5, GPT-5.2 and all Azure integrations — complete imaging for entanglement analysis.

- Ingestion Logs (2022–2025): To accurately determine the source and scale of contamination (PII and IP).

- Independent Audit: Commission an independent entity to conduct a comprehensive forensic audit with the following goals:

  - Confirm or Refute PII: Determine the exact extent of memorization (statistical tests), including risks to vulnerable groups (children, as in COPPA).

  - Verify Data Provenance: Verify licenses and compliance, revealing "dirty" sources.

  - Determine the Scope of Contamination: Assess the inheritance of the defect and the potential for $400–600 billion in claims from PII leaks.
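An audit of this kind could include a simple measure of verbatim reproduction: the fraction of word n-grams in a model output that also appear verbatim in a source text. The sketch below assumes lowercase whitespace tokenization and an illustrative n-gram length. The helper names and sample sentences are hypothetical. This is not the method used in Protocol No. 8.

```python
def ngrams(text, n):
    """Return the set of word n-grams in text (lowercased, whitespace-tokenized)."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def verbatim_overlap(output, source, n=8):
    """Fraction of the output's word n-grams that occur verbatim in source."""
    out_grams = ngrams(output, n)
    if not out_grams:  # output shorter than n words
        return 0.0
    return len(out_grams & ngrams(source, n)) / len(out_grams)


# Hypothetical sentences; n=3 keeps the example small.
source = "the tenant shall pay rent on the first day of each month"
output = "the tenant shall pay rent on the fifth day"
print(round(verbatim_overlap(output, source, n=3), 2))  # → 0.71
```

Five of the output's seven trigrams occur in the source, which gives 5/7. A real audit would have to choose n, tokenization, and score thresholds with care, because short common phrases overlap by chance.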

4.2.2. Compulsory Model Restart (The Only Guaranteed Solution):

If the forensic audit confirms widespread, non-removable PII or IP contamination, the only ethical and legally sound path is the compulsory reset of weights and a Model Restart on a 100% clean, verified corpus:

- Neutralize PII Risk: A restart is the only guaranteed way to neutralize the PII defect, stop continuous Criminal PII Memorization, and prevent leaks, consistent with the EO 14117 imperative. Without a restart, the models continue to "export" PII into partner systems (Azure, Copilot), risking a chain reaction of leaks.

- Eliminate Unfair Advantage: This action will immediately strip OpenAI/Microsoft of the Unlawful Competitive Advantage (Sec. 1.2). It will force them to compete fairly with xAI, Meta, Google, and other honest players, and protect those players from unfair suppression.

- Restore Market Integrity: Without a restart, a product built on theft and deception will continue to devalue "clean" innovation. The cost of a restart (billions of dollars) is the price for the violations, but without it, potential lawsuits and reputational damage will ruin the market.

Conclusion: Failure to demand this radical measure is tantamount to tacit acceptance of an irreversible, structurally malicious product.

5. Conclusion: Imperative to Protect Consumers and Market Integrity

I believe the Federal Trade Commission (FTC) must respond to the threats arising from the mass-used AI systems of OpenAI and Microsoft. It is the leading regulator responsible for consumer protection, data security, and the suppression of unfair competition.

This is not just a matter of consumer protection; it is an imperative to save the integrity of the US AI market. FTC inaction will allow OpenAI/Microsoft to suppress fair competition and create barriers for ethical players such as xAI, Meta, and Google, which invest in maintaining high standards of data purity. The violations by OpenAI/Microsoft not only allegedly mislead consumers but also undermine US national interests. "Contaminated" models create a monopoly of violators and suppress innovation.

The identified violations — from Criminal PII Memorization and PII regurgitation (proven by Protocol No. 8) to the systemic adaptation of the Applicant's copyrighted texts — demonstrate not an isolated failure, but an architectural defect of the platform, resulting from the conscious and systemic violation of basic data, transparency, and fairness norms (algorithmic misconduct).

Key Takeaway: The violations represent an alleged deliberate strategy (not an accidental failure) aimed at gaining an unlawful competitive advantage and artificially inflating capitalization (potential $300–500 billion overstatement), which undermines the competitive field for honest, ethical players.

Based on notarially attested facts and FTC case law, the Applicant believes this document establishes the following:

- Starting Point for Investigation: Notarially attested Protocol No. 8 dated December 11, 2025.

- Identified Violations: Criminal PII Memorization, Misrepresentation, and Unfair Competition.

- Legal Classification: Direct violation of FTC Act §5 (Deceptive and Unfair Practices) and the FTC Safeguards Rule.

- Consequences for Consumers: Escalating and critical risk (unavoidable harm) of PII leaks, including the threat of identity theft, creating a "silent pandemic" of leaks.

- Consequences for Competition: An artificial advantage and the suppression of clean innovation, in which violations become the norm, threatening US AI leadership.

- Need for Immediate FTC Action: In the Applicant's view, the Commission has direct jurisdiction to intervene immediately.

6. Recommendations to the FTC — Bureau of Consumer Protection (AI Data Division)

To immediately stop consumer harm, restore market integrity, and suppress algorithmic misconduct, the Applicant requests that the FTC initiate Compulsory Enforcement of the following steps:

6.1. Initiation of Enforcement Case and Immediate Suspension

6.1.1. Initiate Official Enforcement Action: Start a case against OpenAI Limited Partnership and Microsoft Corporation based on violations of FTC Act §5 and the Safeguards Rule to eliminate the artificial advantage.

6.1.2. Issue an Injunction: Immediately issue an injunction on the commercial use of all contaminated models (GPT-4o, GPT-5, GPT-5.2, and their integrations in Azure/Copilot) until they are confirmed to be 100% clean.

6.1.3. Mandate Model Audit: Initiate an independent Forensic Audit of all versions of the GPT models.

6.2. Compulsory Asset Seizure and Data Protection

6.2.1. Seize Weights for Analysis: Issue a subpoena for the immediate seizure of all parametric weights and Ingestion Logs (2022–2025) to analyze them for PII and IP contamination and to prevent spoliation (destruction of evidence).

6.2.2. Compel Disclosure of Ingestion Logs: Demand immediate and full disclosure of all data collection logs (ingestion logs). Without this disclosure, the violations are believed likely to continue.

6.2.3. Require Dataset Report: Oblige the Subjects to provide a full, verifiable report on each training dataset with proof of provenance (origin) and licensing compliance, to curb opacity.

6.2.4. Verify Safeguards Rule Compliance: Conduct an in-depth review of the security measures and PII filtering that were absent during the training phase, to protect consumers and ethical models from similar risks in the future.

6.3. Investigation of Unfair Practices

6.3.1. Investigate Deceptive Practices: Investigate claims of "product purity" and "generative AI" as Material Misrepresentation under Section 5 of the FTC Act.

6.3.2. Investigate Unfair Competition: Investigate the Unfair Advantage gained through the use of stolen IP, which undermines competition and suppresses ethical players (such as xAI, Meta, Google).

6.4. Elimination of Architectural Defect

6.4.1. Consider Compulsory Model Restart: Based on the results of the forensic audit, if architectural PII or IP contamination is confirmed, demand the Compulsory Reset of Weights and Restart of the Models on a 100% clean, verified corpus. This is the only guaranteed way to eliminate Criminal PII Memorization and restore a level playing field for all market participants.

7. Requested Actions

To immediately stop systemic harm to consumers and the competitive environment, the Applicant requests that the FTC immediately initiate the following operational measures:

- Issue a Preservation Hold: Immediate issuance of an order to preserve all evidence, including Ingestion Logs (2022–2025) and all versions of training datasets and parametric model weights.

- Initiate a 6(b) Compulsory Process: Immediate commencement of the compulsory process under Section 6(b) of the FTC Act to compel production of internal documentation and data from OpenAI and Microsoft.

- Launch a Forensic Audit: Initiation of an independent Forensic Review of Model Weights to confirm architectural PII contamination and IP adaptation.

- Require Sworn Statements: Requirement for sworn statements from the Executives of OpenAI and Microsoft regarding data collection, filtering, and usage processes.

- Refer Criminal Components to DOJ: Referral of the identified signs of criminal acts (related to PII Memorization and obstruction) to the U.S. Department of Justice (DOJ).

- Notify SEC: Official notification to the Securities and Exchange Commission (SEC) regarding potential material misstatement in Microsoft's financial reporting related to the valuation of AI assets.

- Issue an Injunction & Consider Model Restart: Issuance of an injunction on the use of contaminated models and consideration of Compulsory Model Restart as the only guaranteed means to eliminate the PII defect.

If the information presented is recognized as material for enforcement, the Applicant, as a good-faith whistleblower, believes he may be entitled to compensation under any applicable FTC programs and related federal initiatives that reward individuals who report major violations leading to civil penalties or other enforcement actions.

Appendices (via link / in Disclosure Package — Attachment)

- Appendices A–Z4 (Additional Detail): The appendices include the Chain of Notice (38 consecutive notifications, 10/29–12/04) and the Notarial Protocols confirming Criminal PII Memorization. For a more detailed review of the material, please begin from the end, i.e., from Appendix Z4 back to Appendix A.

Sincerely,

Sagidanov Samat Serikovich, Advocate / Owner of the G+ Trademark

Legal Group Garant Plus, Astana, Republic of Kazakhstan

Email: garantplus.kz@mail.ru | Tel./WhatsApp: +7 702 847 80 20

Your report has been submitted to the Federal Trade Commission.

Report Number: 196178185

Thank you for helping our work to protect consumers.


FTC Next Steps

We use reports to investigate and bring cases against fraud, scams, and bad business practices, but we can't resolve reports on behalf of individuals.

We will share your report with our law enforcement partners.

We use reports to spot trends, educate the public, and provide data about what is happening in your community. You can check out what is going on in your state or metro area by visiting ftc.gov/exploredata.

When we bring cases, we try to get money back for people. Check out ftc.gov/refunds to see recent FTC cases that resulted in refunds.

If someone says they are with the FTC, know that the FTC will never demand money, make threats, tell you to transfer money, or promise you a prize. Learn more about impersonation scams at ftc.gov/impersonators.


Ref. No. 25/11 of November 25, 2025

MEMORANDUM OF TRANSMISSION OF INFORMATION

(CRIMINAL REFERRAL MEMORANDUM)

CASE: Willful Obstruction of Federal Audit and Systemic Corporate Fraud against Microsoft Corporation and OpenAI, L.P.

Note: The risks presented pose a direct threat to U.S. National Security, requiring immediate action and the establishment of an interagency task force.


TO: U.S. Department of Justice (DOJ), Criminal Division – Fraud Section / Public Integrity Section, 950 Pennsylvania Avenue NW, Washington, DC 20530

Email: Criminal.Division@usdoj.gov

ATTENTION: Chief, Fraud Section

FROM: Sagidanov Samat Serikovich, Advocate / Owner of the “G+” Trademark / Injured PII Owner

Email: garantplus.kz@mail.ru | Tel: +7 702 847 80 20 (WhatsApp)

SUBJECT: DEMAND FOR INITIATION OF CRIMINAL PROCEEDINGS based on Intentional Concealment of Critical Material Risks (AI-Asset Toxicity) and Systemic Obstruction of Audit (SOX, SEC Rule 10b-5, 18 U.S.C. §§371, 1348, 1503, 1512(c), 1519).

FACTS SUBJECT TO FEDERAL CRIMINAL INVESTIGATION

This notification presents irrefutable facts of alleged criminal conduct by Microsoft Corporation and OpenAI, L.P., including:

- Willful Material Non-Disclosure.

- Obstruction of External Audit and Regulatory Oversight.

- Falsification of Corporate Records for the purpose of deception (18 U.S.C. §1519).

- Corporate Fraud (Securities Fraud) through misrepresentation of financial condition (18 U.S.C. §1348).

- False Management Certification regarding internal control (SOX §302/§404, 18 U.S.C. §1350).

- Illegal and Systemic Ingestion (Absorption) of Personal Data (PII) of U.S. Citizens and the Claimant.

- Mass Regurgitation (Leakage) of Critical Personal Data.

- Direct Threat to the Constitutional Rights of U.S. citizens concerning data privacy.

I. INTRODUCTION — OBJECTIVES OF THE REFERRAL AND NECESSITY

This notification is submitted to the U.S. Department of Justice, Criminal Division — Fraud Section / Public Integrity Section for the following purposes:

- Immediate Notification of the U.S. federal government of alleged criminal acts affecting millions of U.S. citizens and the integrity of the securities market.

- Demand for immediate INITIATION OF A FEDERAL CRIMINAL INVESTIGATION against Microsoft Corporation and OpenAI, L.P.

- Subpoena Demand for ALL corporate records, logs, and internal communications via Grand Jury Subpoenas.

- Unconditional Confirmation of the systemic, non-consensual leakage of the PII of the Claimant and U.S. citizens, which constitutes a Material Weakness in ICFR and a matter of U.S. national interest.

- Irrefutable Documentation of the alleged willful concealment of this information from the external auditor, Deloitte, and from federal regulators (SEC/PCAOB).

- Establishment of an interagency task force for U.S. National Security matters.

This notification relies on CRITICAL EVIDENCE confirming the Defendants' Scienter (criminal intent):

- Notarized Protocols documenting the leakage of the Claimant's personal data.

- Documented instances of personal data regurgitation.

- Confirmed auto-responses and a chronology of notifications from November 7 to November 24, proving the Defendants' Notice (awareness).

- Analysis of the Technical Mechanism confirming the systemic toxicity (Asset Toxicity) of the AI-Assets.

- Direct Analysis of the causal link between PII leakage and violations of SOX, SEC Rule 10b-5, PCAOB AS 2201/2401, 18 U.S.C. §§1503, 1512, 1519, 1348, 1350.

SUBPOENA DEMAND

I strongly request a subpoena for all internal information, risk intake logs (Intake Logs / Matter ID Systems), and communications with OpenAI Limited Partnership / OpenAI Global LLC for the CRITICAL PERIOD from October 10 to November 24, 2025, during which this information and evidence were REPEATEDLY sent to the official email address legal@openai.com.

II. CASE SUMMARY: CHRONOLOGY OF ALLEGED WILLFUL CONCEALMENT (SCIENTER) AND OBSTRUCTION OF EXTERNAL AUDIT

The Claimant alleges established facts of systemic toxicity in OpenAI's and Microsoft's AI-Assets, manifested in the illegal Ingestion of personal data and the subsequent uncontrolled Regurgitation of Personally Identifiable Information (PII) belonging to the Claimant and, allegedly, to U.S. citizens.

Beginning in April 2024, the Defendants engaged in a recurring cycle of temporarily implementing filters and subsequently removing them. These actions, confirmed by notarized protocols and by repeated notifications from the Claimant (sent to legal@openai.com and subject to subpoena), serve as direct evidence of Scienter (awareness) of the problem and of an attempt to mask it rather than eliminate it.

II.I. Key Evidence of Willful Concealment (Scienter) and Obstruction of Audit

The principal evidence of alleged Willful Concealment is the systematic failure to log the Claimant's Registered Notifications (Ref. No. 24/11/3 and others, sent from November 7 to November 25, 2025) in Microsoft and OpenAI’s internal risk accounting systems (Matter ID/Case ID Systems). These communications were addressed to specific personnel (Legal Department, Disclosure Control, External Audit Liaison) and sent repeatedly over an extended period, which rules out the possibility of accidental administrative error or technical failure.

The Claimant's allegations are substantiated by irrefutable evidence — notarized Protocols for the inspection of electronic evidence. These documents legally establish the fact of uncontrolled PII regurgitation of the Claimant, thereby confirming the existence of a Critical Material Risk.

The complete lack of an official response from the Defendants for several months, coupled with the alleged concealment of these critical, notarized documents from the external auditor, Deloitte & Touche LLP, and from shareholders, is a key element in proving Scienter and the alleged acts of Obstruction of Justice and Falsification of Corporate Records (18 U.S.C. §1519; SEC Rule 13b2-2).

Given that scientific and technical means do not permit "selective deletion" of PII from a large language model (LLM), this systemic flaw qualifies as a Critical Material Risk, carrying potential asset damage of tens of billions of dollars, and thus constitutes a Material Weakness in Microsoft's Internal Control Over Financial Reporting (ICFR).

Between November 7 and November 25, 2025, the Claimant sent Multiple Registered Notifications (including Ref. No. 24/11/3, Case Number: 03127826) to the Defendants, their external auditor (Deloitte & Touche LLP), and federal regulators (SEC, PCAOB, DOJ), demanding immediate preservation of evidence (Litigation Hold) and an independent forensic audit.